[November 16th 2005]

Electro Drummer by Chico MacMurtrie (http://amorphicrobotworks.org/). Photo by Doug Adesko.

Intelligent Tools in Music

In this article, Thor Magnusson introduces the role of computational intelligence in the composition of music. The author asks what benefits a computer brings to the musician, how the computer can contribute to the creative process, and whether a computer can be creative at all. Thor Magnusson is an Icelandic musician and programmer working in the fields of music and generative art. He is co-founder of the ixi software collective (www.ixi-software.net). He is currently based in Brighton, where he is doing research in artificial intelligence, human-computer interaction and audio programming.

Computers and creativity

Computational intelligence has developed fast over the last half century. Although we are nowhere near fulfilling the prophecies made some 20 years ago, technologies from fields such as Artificial Intelligence and Neural Networks are being used successfully in many different areas where complex control and adaptive systems are necessary. From early on, people have been using these technologies in the arts, especially music, with interesting results. Software such as Emmy (the name derived from EMI - Experiments in Musical Intelligence) by David Cope has proved able to compose music in the styles of composers such as Bach or Mozart with results that have fooled music specialists. Emmy has shown that artificial intelligence can be used to compose music, but how interesting are the results? Are they innovative, expressive and personal? The answers to these questions are bound to be very subjective and difficult to discuss. In addition to asking whether computers can compose new and interesting music, perhaps we should also ask ourselves why we want this to happen. From where does the desire arise to make a computer compose a musical piece? Is it the ultimate challenge in computing to make intelligent systems that make profound art? Or would such music (if interesting) be the final victory in man's desire to create the Golem or self-conscious android?

The aim of this article is not to address those philosophical questions, but rather to investigate what benefits computers bring to the musician. What Cope's Emmy does is analyse music by a chosen composer, detect the rules that the composer is using and then generate similar music according to those rules. This is done by using techniques in data encoding, melodic and harmonic analysis, tempo signature analysis and pattern matching. According to Cope, much of the material Emmy produces goes directly into the bin, although some of it is remarkably similar to compositions by Bach, Beethoven or Mozart. But... there is always the big BUT...

LEMUR (League of Electronic Musical Urban Robots) Guitarbot. Photo by Aaron Meyers.

In her 1990 book The Creative Mind, Margaret Boden defines three areas of creativity: combinational, exploratory and transformational creativity. Combinational creativity involves making unfamiliar combinations of familiar ideas. The artist (or a computer, for that matter) manipulates cultural symbols and represents them in a new work. The second area, exploratory creativity, involves exploration of a conceptual space. The rules of the art form are defined and the artist explores what can be done within the framework of those rules. Finally, transformational creativity happens when the artist reaches the limitations of the style he/she is working within and breaks out of that paradigm. It involves transcending the conceptual space - thinking a previously unthinkable thought - and moving into an unknown area. Such a transformation of the form happens, according to Boden, in the works of such artists as Bach, Picasso and Joyce (we will not go into the psycho-sociological question here as to whether such artists are merely symptoms of cultural changes, the tip of the iceberg, or unique personalities with vision that reaches beyond others').

Software such as Emmy can be said to be exploratory - it explores the area of compositional rules laid out by the composers it has analysed - but it can hardly be said to be transformational. How could a computer ever perform transformational creativity? Randomness, genetic algorithms and self-reflecting agents are not capable of bending stylistic forms in a way relevant to human culture. True transformations in culture, and artistic styles in particular, happen in the real, lived, social situations in which artists find themselves, and they depend on innumerable parameters. Everything becomes a factor: politics, weather, housing, stress levels, social tension, other artistic trends, fashion and many other wild cards to which the computer is obviously oblivious. Computers can therefore, according to this reasoning, hardly be creative in the transformational sense. They do not exist in an embodied, lived situation as beings-in-the-world, to paraphrase Heidegger, but rather as isolated, minor brains with highly limited sensory input. They can however be good tools to use in exploratory creativity, where the rules of the art have already been defined by the composer or the musician, and where the task of the computer is to explore the possible expressive space of those rules and generate material according to them. Emmy is a good example of such usage.
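To make the idea of exploring a rule space concrete, here is a minimal sketch in Python. It is emphatically not how Emmy works (Cope's system relies on far richer analysis and pattern matching); it swaps in a simple first-order Markov chain, which only records which note followed which in a source phrase and then wanders through those transitions. The function names and the source phrase are hypothetical, chosen for illustration.

```python
import random
from collections import defaultdict

def learn_transitions(melody):
    """Record, for each note, the notes that followed it in the source melody."""
    transitions = defaultdict(list)
    for current, following in zip(melody, melody[1:]):
        transitions[current].append(following)
    return dict(transitions)

def generate(transitions, start, length, rng):
    """Walk the learned transitions to build a new melody of at most `length` notes."""
    melody = [start]
    while len(melody) < length:
        options = transitions.get(melody[-1])
        if not options:  # dead end: this note never had a successor in the source
            break
        melody.append(rng.choice(options))
    return melody

# Hypothetical source phrase as MIDI note numbers (not taken from any real score).
source = [60, 62, 64, 65, 64, 62, 60, 62, 64, 62, 60]
rules = learn_transitions(source)
new_phrase = generate(rules, start=60, length=16, rng=random.Random(1))
print(new_phrase)
```

Every step in the generated phrase is a step that already occurs in the source, which is exactly why such a system stays inside the conceptual space it was given: it can recombine, but it cannot break the rules it has learned.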

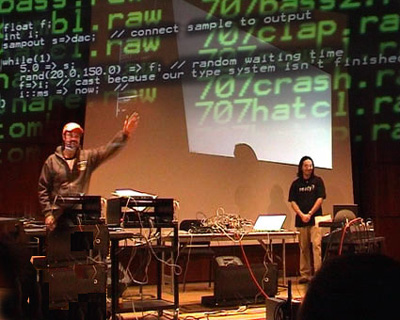

The creators of ChucK, Perry Cook and Ge Wang, perform live coding. ChucK is an audio programming language for real-time synthesis, composition, and performance. http://chuck.cs.princeton.edu/

Tools and instruments

At present, it's hard to imagine a truly creative computer in the transformational sense. But who knows - after all, scientists are developing artificial skin, computer vision, adaptive and evolutionary robots, carbon chips, artificial neural networks and sophisticated techniques in machine learning. It's just that it's not possible to investigate this question properly at the moment outside fields such as science fiction or futurology.

This does not imply that the computer cannot be used in interesting ways in countless musical situations. There are various areas where the power of the computer can be applied in the arts and particularly music. Music and computing have been intertwined from the start and these two mathematically sophisticated fields have always inspired each other. At first, people were doing experiments in electronic music in laboratories such as Bell Labs, in universities and institutions. In the 1950s Max Mathews created the Music N languages, and most other music languages have derived from them, becoming increasingly popular with composers and musicians with the advent of the personal computer.

The music industry is a strong business and very quickly (in the 1980s) it became clear that developing and marketing computer music software for personal computers was highly profitable (as opposed to the free software of earlier experiments). The most popular approach of the commercial companies was to provide packages that tried to copy the physical recording studio into the screen-space of the computer. Because the processing power of computers was limited, the digital signal processing needed for sound was not possible, so most commercial music software took the form of MIDI sequencers (where music is organised linearly as tracks on a tape or lines in a musical score). This software was aimed not at programmers but at musicians, and it tried to represent the physical working environment of the musician in the digital realm of the computer.

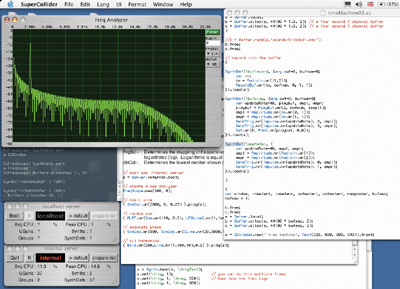

Screenshot of the audio programming language SuperCollider. supercollider.sourceforge.net.

Things have changed rapidly in this field since the 80s. Personal computers are ubiquitous and powerful enough to record, manipulate or synthesise real audio at professional sampling rates, and young people are far from intimidated by programming a computer. It has become almost a natural activity. Thus, we are witnessing the increasing popularity of audio programming languages such as Max/MSP, CSound, Pure Data, SuperCollider and ChucK. Most of these languages are high-level, open source and free, or at least extensible by creating one's own externals (additions to the language) or lower level code for audio manipulation. The fascination people have with programming their own music software and music can be explained by the straitjacket feeling many musicians have expressed when working with commercial software. It is as if the designers and programmers behind the software are imposing their own ideas about music and how the musical work-process should take place.

We are now in a situation where audio programming is widespread and individuals are developing, independently or as part of universities, tools and instruments using the above-mentioned languages, and many others, as their primary compositional and performative tools. Ingenious research is being done in areas such as synthesis, physical modelling, pitch and tempo recognition, pattern recognition and physical and graphic interfaces for musical applications. It is here at this level, where the computer is seen primarily as an instrument, that techniques found in artificial intelligence can be used in the most practical way. Intelligent systems can adapt to the musician, interpret and refine his or her actions and suggest new ways according to rule-sets that the musician defines. These rules can have various degrees of randomness or algorithmic indetermination, thus potentially opening up unlikely areas of thought or compositional patterns.
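A rule-set with an adjustable degree of randomness might look something like the following Python sketch. It is an illustration under stated assumptions, not a description of any existing tool: the musician's rule is simply "stay within this scale", the `randomness` parameter is the probability that any given note is nudged to a neighbouring scale degree, and the function name `suggest_variation` is hypothetical.

```python
import random

def suggest_variation(phrase, scale, randomness, rng):
    """Return a variation of `phrase`. Each note is, with probability
    `randomness`, moved one step up or down the musician's scale
    (clamped at the ends of the scale). Notes must belong to `scale`."""
    variation = []
    for note in phrase:
        if rng.random() < randomness:
            index = scale.index(note) + rng.choice([-1, 1])
            index = max(0, min(len(scale) - 1, index))
            variation.append(scale[index])
        else:
            variation.append(note)
    return variation

# Hypothetical rule-set: one octave of C major as MIDI note numbers.
c_major = [60, 62, 64, 65, 67, 69, 71, 72]
played = [60, 64, 67, 72]
print(suggest_variation(played, c_major, randomness=0.5, rng=random.Random(7)))
```

With `randomness` at 0 the system merely echoes the musician; as it approaches 1 the suggestions drift further from what was played, while still obeying the rule the musician set.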

Embodiment and human-machine relations

Although the field of computer music is boiling with creativity and inventiveness at the moment, it is still faced with a dire problem of embodiment. The problem is twofold. From the audience side, some find it frustrating not to know what the musician is doing when he/she is sitting behind that screen, a problem which has resulted in the 'VJ solution', where the music is accompanied by computer generated visuals. This is not a real solution and it is rarely satisfactory. From the performer's side, it can be frustrating to lack the physicality of acoustic instruments, where a trained mind-body combination plays the instrument with a subtlety that is hard to achieve on the computer. This is a temporary situation. Technology is taking us fast beyond the simple mouse-keyboard-screen input-output model and into much richer multisensory and multifeedback mechanisms. Sensor and feedback technologies are making it possible for musicians to control audio synthesis or musical patterns with the movement of the body in very sophisticated ways, and software such as the programming languages mentioned above has already developed ways to communicate with such devices.

Atau Tanaka plays at the NIME 2002 festival. Photography: Michael Lyons.

It is here that some of the powers of computational intelligence become highly relevant and interesting. The question of how to map human gestures into musical signals is one of the most important questions in this human-machine relationship, a question that is neither easily solved nor answered with a single solution. Virtuosity on a digital instrument is hardly possible in the sense we are used to with acoustic instruments. Digital instruments are dynamic in nature: a changed variable in a program might change the behaviour of the whole physical instrument, and the musician would have to re-train him or herself according to the change. Another question, to be studied elsewhere, is how the music technology industry will solve these problems and try to market devices and software which respond to these new needs expressed by composers and musicians of various musical traditions and styles. I believe that we will see some ingenious use of Artificial Intelligence in the space between the gestural hardware and the digital sound engines. AI can be used in the mapping process, where the instrument might adapt, evolve or change itself according to how the musician plays and the instructions he or she sets. Furthermore, this kind of intelligence has to be set up and controlled by the individual musician, resulting in more heterogeneous music, as each system is unique and not conditioned by predefined settings. The systems of tomorrow will be individualised, open and easily controlled, and hopefully the music will be coloured by this fact.
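As a very modest illustration of a mapping that adapts to how the musician plays, consider the Python sketch below. It falls far short of artificial intelligence: it is only a self-calibrating range, assumed here for the sake of example, which stretches to fit the gestures it has observed so that each player's own range of movement spans the whole pitch range. The class name, method name and frequency limits are hypothetical.

```python
class AdaptiveMapping:
    """Maps a raw sensor reading to a frequency in Hz, widening its input
    range to cover every reading the player has produced so far."""

    def __init__(self, low_hz=110.0, high_hz=880.0):
        self.low_hz = low_hz
        self.high_hz = high_hz
        self.seen_min = None
        self.seen_max = None

    def to_frequency(self, reading):
        # Adapt: extend the known gesture range to include this reading.
        if self.seen_min is None:
            self.seen_min = self.seen_max = reading
        else:
            self.seen_min = min(self.seen_min, reading)
            self.seen_max = max(self.seen_max, reading)

        span = self.seen_max - self.seen_min
        # Until two distinct readings exist, sit in the middle of the range.
        position = 0.5 if span == 0 else (reading - self.seen_min) / span

        # Exponential interpolation: equal gesture steps give equal musical intervals.
        return self.low_hz * (self.high_hz / self.low_hz) ** position

# Hypothetical sensor readings from a player with a small range of movement.
instrument = AdaptiveMapping()
for reading in [0.3, 0.1, 0.9, 0.5]:
    print(reading, round(instrument.to_frequency(reading), 2))
```

Note that the same reading can yield a different pitch after the range has adapted, which is a small-scale version of the re-training problem mentioned above: an instrument that changes itself also changes what the musician has to learn.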

For further information check:

- NIME - www.nime.org

- ixi software - www.ixi-software.net

- SuperCollider - supercollider.sourceforge.net

- Max/MSP - www.cycling74.com

- ChucK - chuck.cs.princeton.edu

- Pure Data - www.pure-data.org

- CSound - www.csounds.com

- David Cope - arts.ucsc.edu/faculty/cope/

- Margaret Boden - The Creative Mind: Myths and Mechanisms. London: Weidenfeld and Nicolson, 1990.
